November 4, 2021

SundaeSwap Scalability

Sundae Labs Team

Much ink has been spilled in recent weeks about scalability on Cardano; we wrote an article about the trade-offs IOG had made affecting scalability, and several projects have posted about their architectural solutions to offset some of these trade-offs. As SundaeSwap approaches its public testnet and subsequent launch, we thought now would be a good time to crack open the hood on our protocol architecture and the journey we took to get there.

We evaluated a number of solutions in depth, and some of these bear a resemblance to those being discussed elsewhere in the Cardano ecosystem. While each protocol has its own set of criteria and trade-offs that it chooses to prioritize, we concluded that many approaches had at least one serious flaw that ultimately caused us to reject them.

Since it would be difficult (and uninteresting) to give each of these proposed solutions the same depth of treatment as our internal evaluation, the first section of this article will provide the 50,000-foot view of the solutions and criteria we used to evaluate them.

Subsequent sections will briefly describe how each protocol is intended to work, plus what we consider to be the fatal flaw(s) that disqualified the solution for the SundaeSwap protocol.

Finally, we will spend the remainder of this article discussing the solution we did settle on, plus some hints for future ongoing research to extend the protocol.

Overview

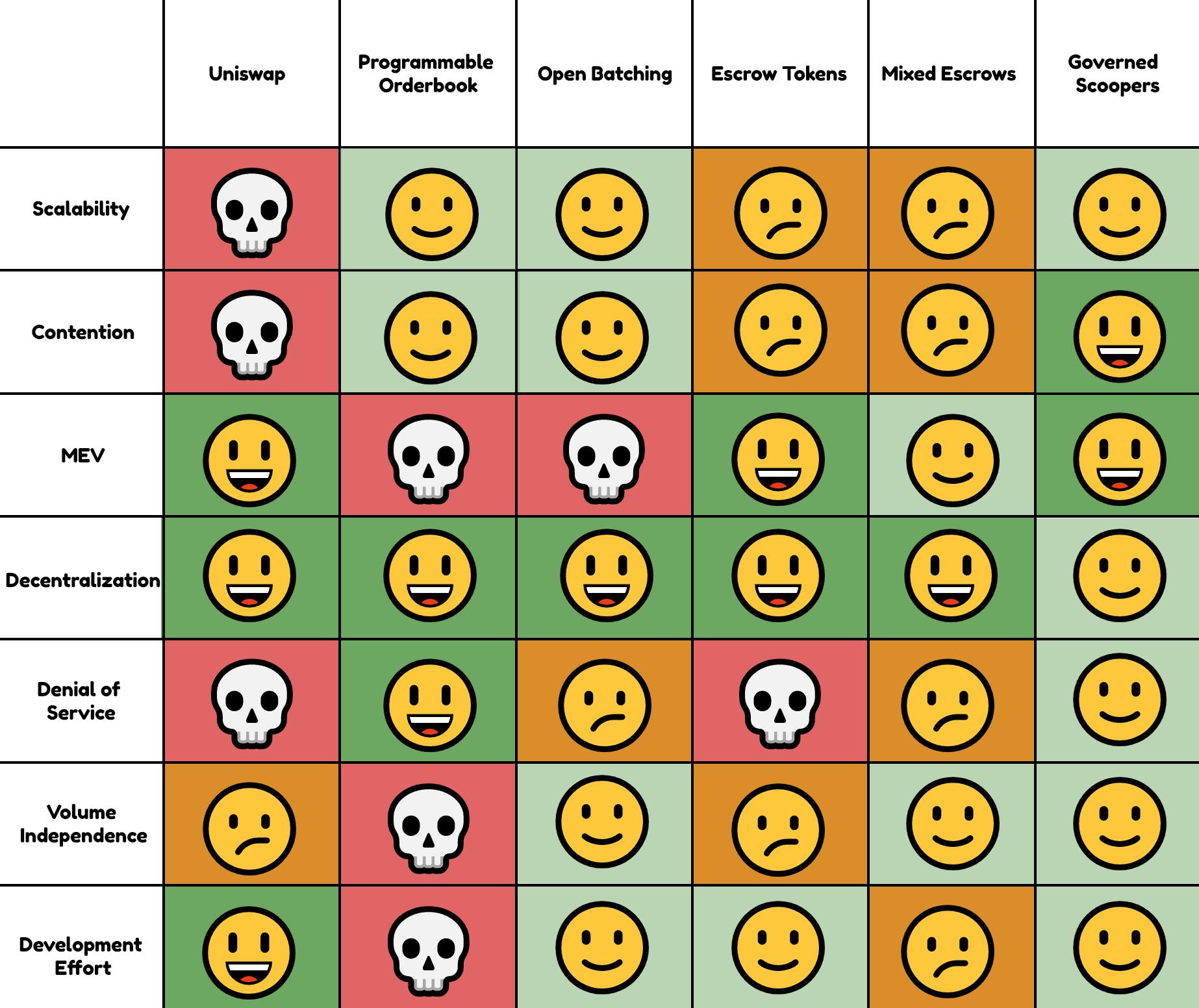

The SundaeSwap team has invested an incredible amount of effort evaluating different solutions. As mentioned, it would take far too long to describe each deliberation in detail, but we wanted to at least provide the high-level feature matrix that came out of our evaluations.

A non-exhaustive list of the names we gave internally to the top solutions:

- Uniswap Clone: A direct port of Uniswap’s logic to Cardano, as described in our whitepaper (mostly just for comparison)

- Open Batching: Allow anyone to aggregate requests for swaps against a pool

- Tokenized Escrows: Issue state-channel tokens to prevent market censorship

- Mixed Escrows: A hybrid of state-channel tokens, for guaranteed order flow, and open orders, for scalability

- Programmable Orderbook: Full order-book model with programmable criteria

Ultimately, we decided on a solution that we’re calling AMM Orderbook, which is discussed in the last section of this article.

We evaluated each of the foregoing solution options against at least the following criteria:

- Scalability: How many users / transactions can the protocol support in a sustained way?

- Contention: How often does an end user have to re-submit their transaction?

- Miner Extractable Value (MEV): How vulnerable is the protocol to market manipulation?

- Decentralization: How robust is the protocol against being halted by a single party?

- Denial of Service: How robust is the protocol against denial of service attacks?

- Volume Independence: How much volume does the protocol need to be useful?

- Development Effort: How much effort is it to implement? How much surface area needs to be audited?

And here is how each design stacked up, in our opinion.

A chart of each design ranked for easy visualization. (Note: these rankings reflect our opinion, not established fact.)

Rejected Solutions

This section of the article will describe how each of the above solutions was intended to work, as well as a description of what we consider to be the fatal flaw that made each unsuitable for the protocol we want to build.

Some of these solutions resemble solutions proposed by other projects, and our intention is not to disparage their work. Each has its own trade-offs, and just because something isn’t the right solution for SundaeSwap doesn’t mean that it doesn’t fill an effective niche for a protocol with different prioritization criteria or objectives.

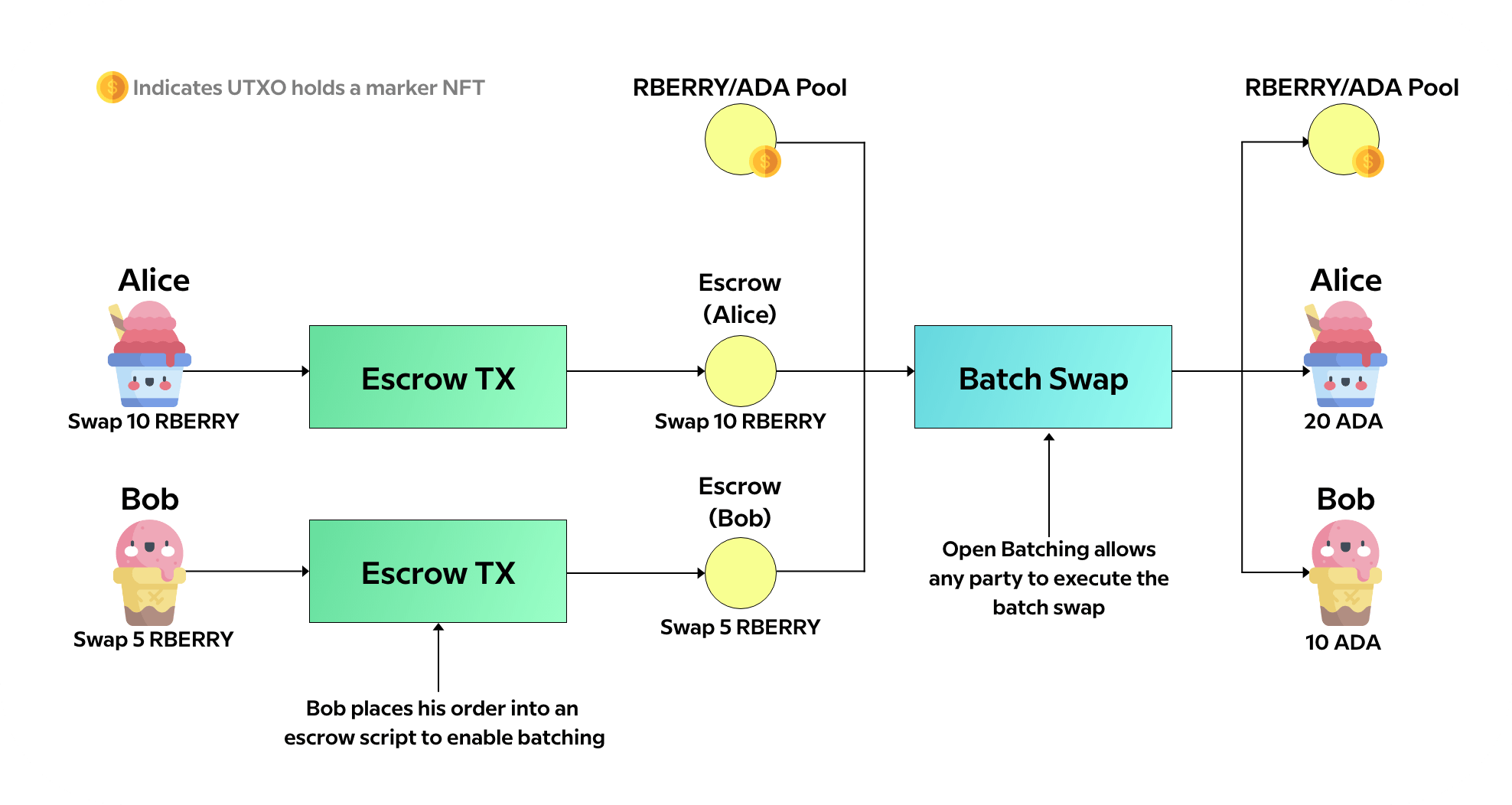

Open Batching

The first solution we evaluated, which even appeared in an early draft of our first whitepaper, was open batching. In this solution, a user locks their funds in a script with a description of their order: “Swap X tokens, for at least Y tokens”. This script allows those funds to be spent as part of a swap against the liquidity pool, or cancelled. Then, some third party “batches” or aggregates these transactions into a single transaction. That one transaction “spends” each of the locked orders, along with the UTXO holding the pool liquidity, and disburses the resulting trades back to the original user.
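As a rough illustration of the flow described above, the sketch below models a pool UTXO and a batch of locked orders. The constant-product pricing rule, the absence of fees, and every name in it (`Pool`, `Order`, `scoop`) are assumptions made for illustration; none of them are taken from the SundaeSwap contracts.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Pool:
    reserve_a: int  # units of token A held by the pool UTXO
    reserve_b: int  # units of token B held by the pool UTXO


@dataclass
class Order:
    owner: str
    give_a: int      # "Swap X tokens..."
    min_take_b: int  # "...for at least Y tokens"


def scoop(pool: Pool, orders: list[Order]) -> tuple[Pool, dict[str, int], list[Order]]:
    """Apply a batch of A->B orders, in sequence, against one pool.

    Returns the new pool state, the payout per owner, and the orders that
    were skipped because their minimum could not be met (these would simply
    stay locked in their script).
    """
    a, b = pool.reserve_a, pool.reserve_b
    payouts: dict[str, int] = {}
    skipped: list[Order] = []
    for order in orders:
        # Assumed rule: constant product without fees, rounded in the pool's favor.
        out_b = (b * order.give_a) // (a + order.give_a)
        if out_b < order.min_take_b:
            skipped.append(order)
            continue
        a += order.give_a
        b -= out_b
        payouts[order.owner] = payouts.get(order.owner, 0) + out_b
    return Pool(a, b), payouts, skipped


pool = Pool(reserve_a=1_000_000, reserve_b=1_000_000)
orders = [
    Order("alice", give_a=10_000, min_take_b=9_000),
    Order("bob", give_a=10_000, min_take_b=9_900),  # too strict once Alice has moved the price
]
new_pool, payouts, skipped = scoop(pool, orders)
```

One transaction consumes the pool and every included order, which is why only the aggregator, and not the end user, competes for the pool UTXO.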

Contention among these batching-bots is acceptable, because it’s not felt by the end user, and the whole protocol makes progress each time any transaction is accepted that includes orders. We started using the term “Scooper” to refer to the role of a transaction aggregator, leaning into the ice-cream theme of our protocol.

If you’ve been following along with the community, this solution is most similar to the one proposed by Minswap, dubbed Laminar.

Open Batching has many important properties that we tried to preserve. In particular, there is no contention for the end user, only for the scoopers, and it is easy to make this composable with other protocols.

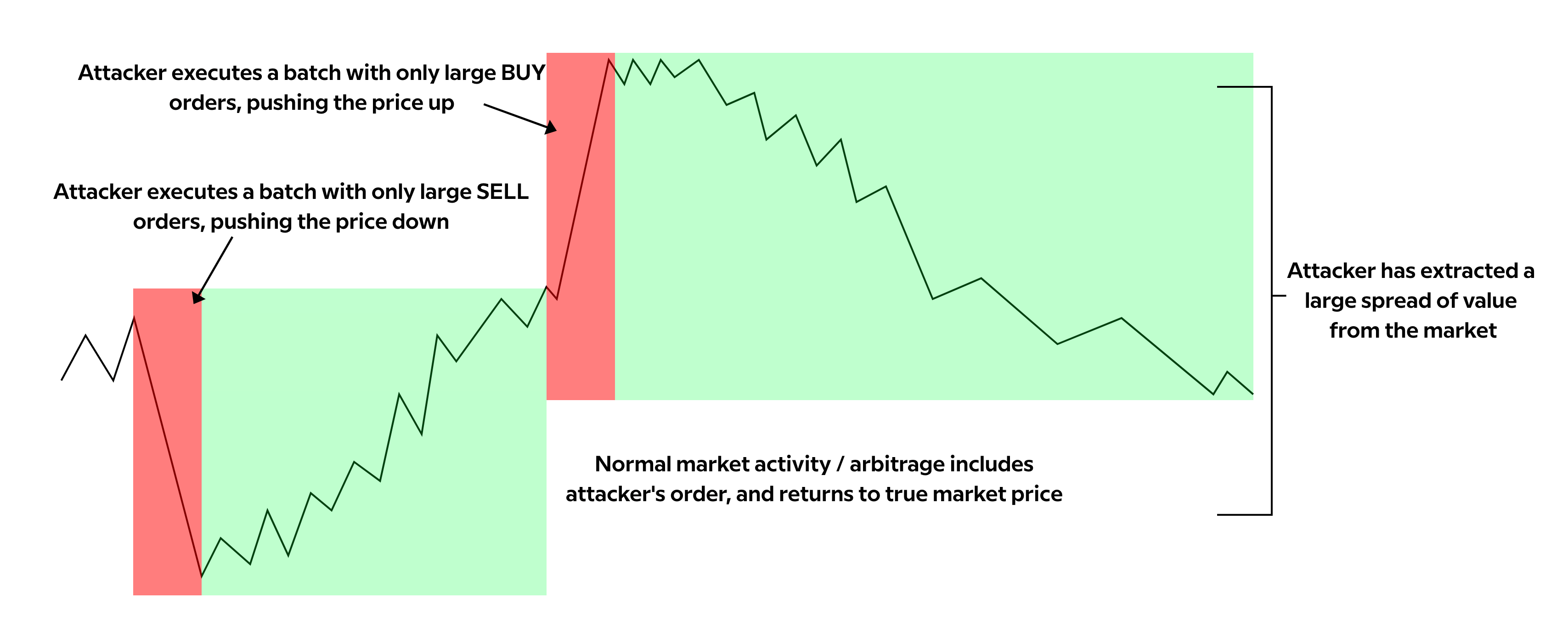

One flaw in this design is that it’s susceptible to a denial of service attack: a malicious scooper executes a trade including zero or one orders, delaying execution of other orders in the interim. In an ecosystem of competing actors, this might be OK; however, once you submit one transaction like this, you have the transaction ID before it propagates across the network, and so have an advantage in building and submitting the next transaction. A sophisticated attacker can spam the network with a long chain of these transactions and have them accepted into the mempool for future blocks fairly easily. This attack, which we’ve been calling a “trickle” attack, is very cheap to execute, and can bring even deeply traded markets to a complete halt.

Another flaw, in our view, is its very high vulnerability to miner extractable value. As the ecosystem grows and people build custom scooper applications, they could censor and reorder the market in ways that profit the party choosing which transactions to include. For example, consider the notion of a “sandwich attack”: process a large number of buy orders to push the price up, then a single sell order from their own wallet, and then a large number of sell orders to bring the price back down. The attack can also be executed in reverse. For more on “miner extractable value”, here is one of our favorite articles on the subject.
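To make the sandwich ordering concrete, here is a small sketch that runs the same set of orders in two different sequences. It assumes a fee-less constant-product pool and made-up order sizes; the helper names (`swap`, `run`) are hypothetical and only serve the illustration.

```python
def swap(reserve_in: int, reserve_out: int, amount_in: int) -> int:
    """Output amount under an assumed constant-product rule with no fee."""
    return (reserve_out * amount_in) // (reserve_in + amount_in)


def run(orders, ada=1_000_000, token=1_000_000):
    """Execute (side, who, amount) orders in the given sequence.

    Returns the total ADA received by the party named 'attacker'.
    """
    attacker_ada = 0
    for side, who, amount in orders:
        if side == "buy":   # pay ADA, receive tokens: token price rises
            out = swap(ada, token, amount)
            ada, token = ada + amount, token - out
        else:               # pay tokens, receive ADA: token price falls
            out = swap(token, ada, amount)
            token, ada = token + amount, ada - out
            if who == "attacker":
                attacker_ada += out
    return attacker_ada


buys = [("buy", f"buyer{i}", 20_000) for i in range(5)]
sells = [("sell", f"seller{i}", 20_000) for i in range(5)]
attack = ("sell", "attacker", 50_000)

neutral = run([attack] + buys + sells)    # attacker sells at the unmoved price
sandwich = run(buys + [attack] + sells)   # buys first, then the attacker, then the sells
print(neutral, sandwich)
```

The orders are identical in both runs; only the sequence chosen by the aggregator differs, and the second sequence pays the attacker more.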

There are a couple of ways that this attack can be mitigated: you can enforce some deterministic ordering, you can make your batched trades order-independent, or you can have many competing aggregators. In each case, however, a sandwich attack is still fairly trivial to pull off. Even in the case of many competing aggregators, for example, you can execute a sandwich attack, since the honest actors will likely include a mix of buy and sell orders that don’t move the price back down as quickly as you pushed it up.

Since the SundaeSwap protocol seeks to be a backbone of DeFi on Cardano, these risks were enough to overpower the other desirable properties of this solution.

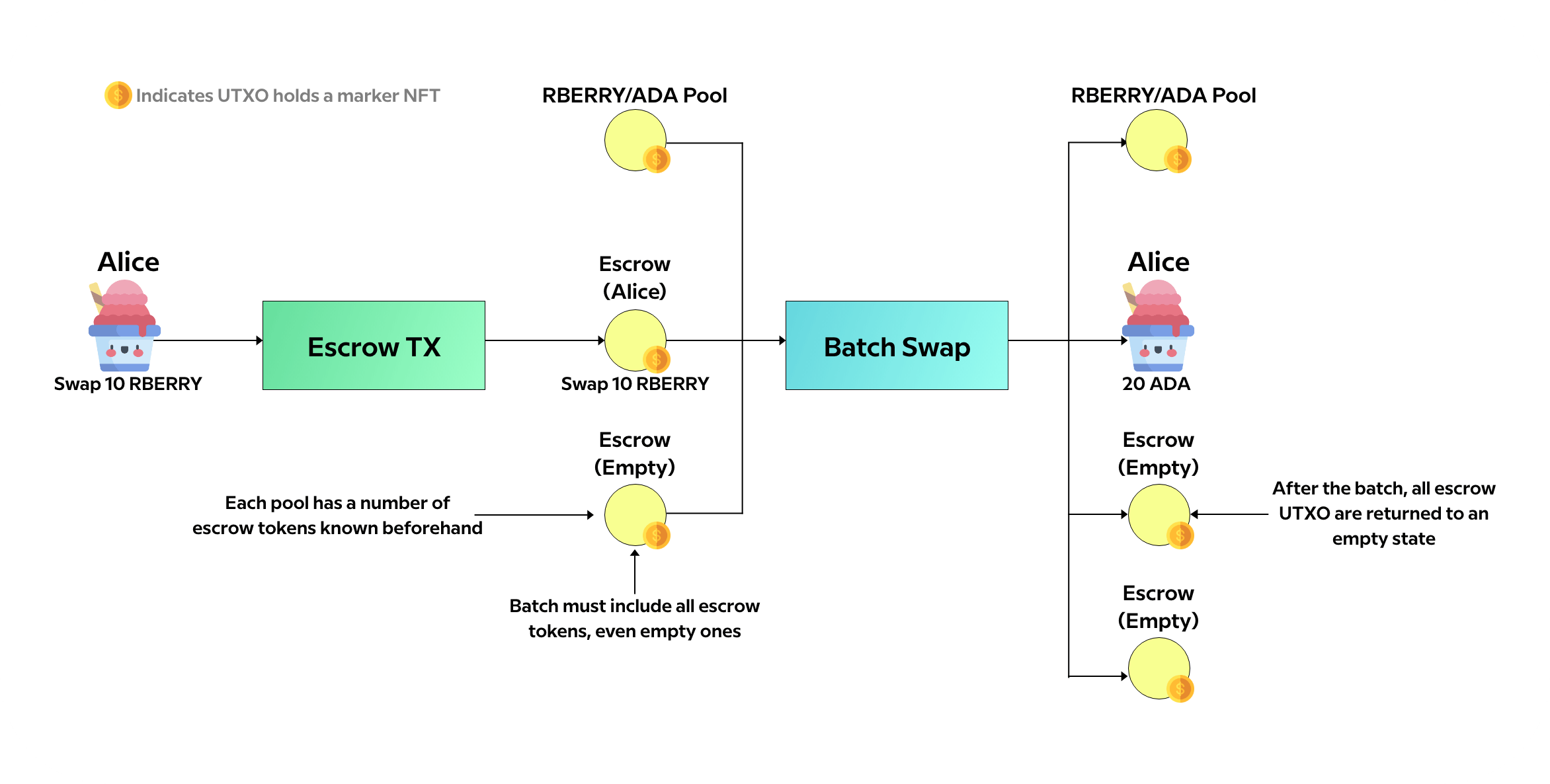

Escrow Tokens

The next solution we evaluated, and had high confidence in for quite a while, was a simple extension of the first. By issuing a number of tokens into scripts that users “spent into” to queue their order, the pool could know how many tokens it needed to witness. This prevented market censorship. Combined with a way to make the swap calculation order-independent, this eliminated all MEV. Users would spend their funds into an “empty” escrow token, which would get included in the next batch. There would be some contention among users who accidentally chose the wrong escrow, but with enough escrows, this would be rare, and would provide a similar experience to occasionally having to retry your online credit card transactions.

This solution bears the most resemblance to the solution proposed by Meld, where they reference “Reserve Tokens.”

For those curious, Escrow Tokens was part of the SundaeSwap protocol in the demos we began showing to VCs behind closed doors in late July, and it featured in our public demo event on CCV Dan’s channel.

Unfortunately, this solution crashes head first into the sizing limits of the Cardano blockchain. The probabilistic nature of the argument above depends on hundreds of escrow tokens, and in practice, our first implementation of this was hitting the Cardano protocol limits with 5 or 6 escrow tokens, before even adding in features like governance.
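The gap between “hundreds” and “5 or 6” escrows can be sketched with a simple probability model. It assumes every user independently picks one escrow uniformly at random within the same batch window; that model, and the figure of 20 concurrent users, are assumptions for illustration rather than measurements.

```python
def collision_probability(escrows: int, concurrent_users: int) -> float:
    """Chance that a given user picks an escrow that at least one other user
    also picked, assuming independent uniform choices (an assumed model)."""
    return 1.0 - (1.0 - 1.0 / escrows) ** (concurrent_users - 1)


for n in (6, 100, 500):
    p = collision_probability(n, concurrent_users=20)
    print(f"{n:>3} escrows: {p:.1%} chance of having to retry")
```

Under this model, a handful of escrows makes a retry the norm rather than the exception, while hundreds keep it rare.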

Additionally, it is very cheap and easy to occupy these escrow tokens with illegitimate orders, effectively creating a denial of service for the bulk of legitimate traffic.

Finally, there is contention between the scooper and the escrow tokens, destroying a lot of the nice scalability properties anyway.

One extension we explored to increase concurrency and defend against trickle attacks similar to the previous section was the notion of phasing. We would mint a rectangular grid of escrow tokens, and force the pool to process an entire column at once. There are many more tokens for users to interact with, meaning there is less likelihood that someone experiences contention, and less opportunity for someone to execute a trickle attack.

Ultimately, with the marginal gains this gave us because of Cardano transaction limits, and the ballooning complexity of the solution, we felt that it was better to abandon this and look for simpler trade-offs we could make.

Mixed Escrows

Before we moved away from escrow tokens entirely, however, we considered one last extension: mixing both token-holding escrows and token-less escrows. By allowing a mixture of tokenized and untokenized orders, we could provide a base-line guaranteed order flow, if a user was willing to retry until their order got in, while also allowing arbitrary concurrency to most casual users.

Unfortunately, this suffers from some of the same denial of service vulnerabilities as the ‘all Escrow Tokens’ solution. Under the resource limits of Cardano transactions and our quickly approaching development timeline, we did not feel we could deliver a compelling, fair, and safe set of incentives around the protocol in time to launch, and so we abandoned this approach.

This is one of the approaches we may revisit in the future when the Cardano transaction limits change, or when we have time to devote to thoroughly exploring the right incentive structures to prevent denial of service.

Programmable Orderbook

Another idea we explored was the notion of a full and configurable order-book. In this model, users lock their funds in a script which encodes the criteria for their order: trade at a specific price, dollar cost average, etc. A key tenet of this approach is the lack of a market maker. Market participants operate completely independently of one another.

This appears to be most similar to the solution described by Maladex.

At first glance, this approach offered a number of properties that were appealing to us:

- No contention to submit orders

- Programmability

- Composability

The Maladex paper does an excellent job of describing these advantages, so we won’t bother to rehash them here. However, as we explored the model, a number of issues came to light. Almost all of them stem from the lack of a market maker to ensure orderly execution. Broadly speaking, those issues are:

- Inefficient use of liquidity

- Difficulty handling large orders

- Blockchain congestion

- Order erosion

- Market depth requirements

- Fee Market

Inefficient use of liquidity

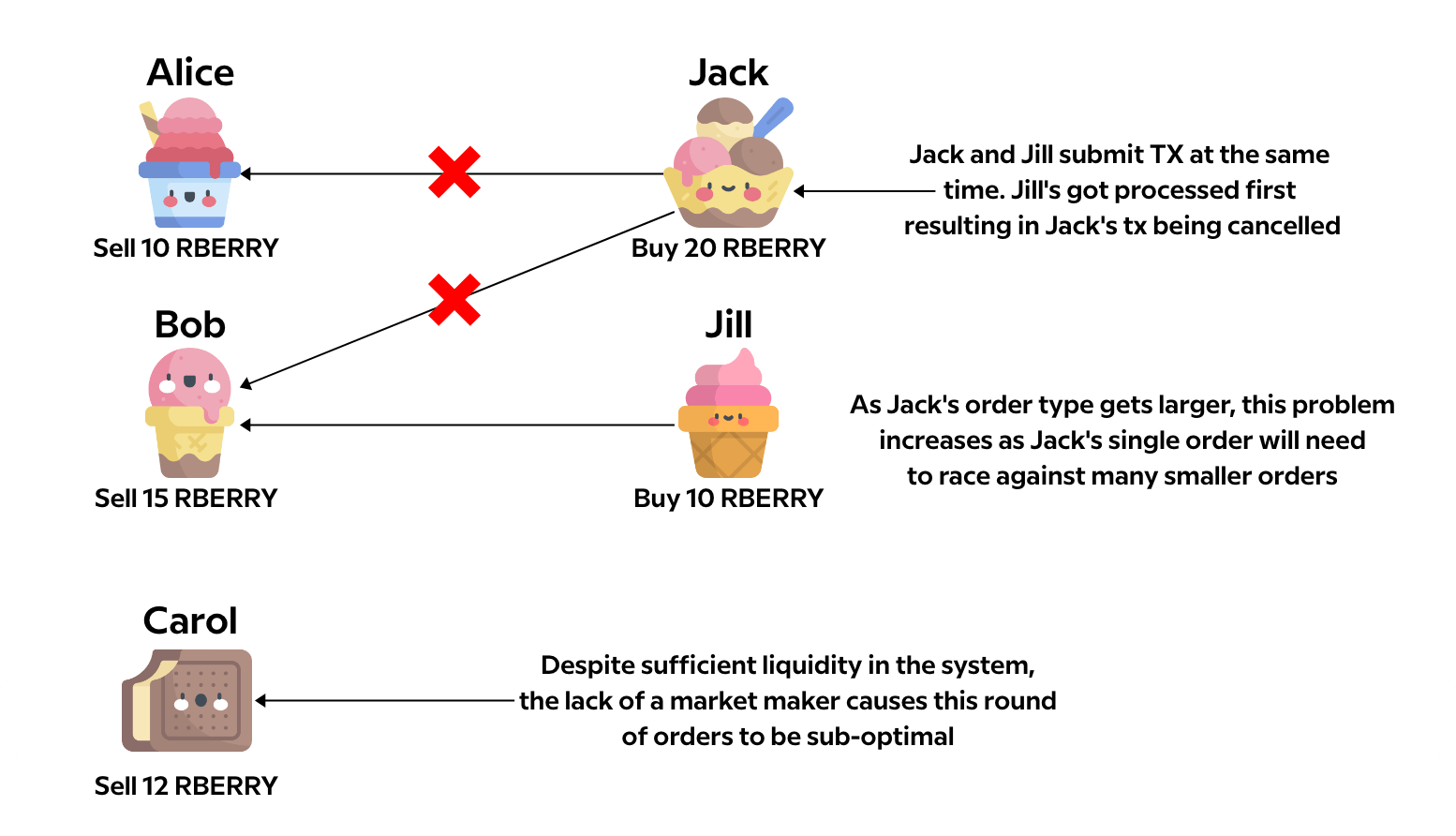

Consider the following example where Alice, Bob, and Carol are providing various amounts of liquidity to the market. Jack and Jill would both like to execute swaps against that market. And in this example, the market has sufficient liquidity to fill both Jack and Jill’s orders. However, unlike a market maker, which could ensure both orders are filled, with a pure orderbook, whether or not the orders are filled depends on which offers Jack and Jill attempt to match against.

Because Jack’s order is larger, he must match against multiple orders to obtain sufficient liquidity to fill his order. Jill, with her smaller order, can fill against a single order. In the above example, both parties decide to include Bob’s order in their match transaction. And unfortunately, only one of the orders, in this case Jill’s, will be filled. But can’t Jack just re-submit his transaction against Alice and Carol? Yes, but this leads us to the next issue ...
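The Jack and Jill example comes down to the rule that a UTXO can be spent only once. A toy model of that rule, with a hypothetical `Ledger` class standing in for the chain, looks like this:

```python
from __future__ import annotations


class Ledger:
    """Toy UTXO set: each output can be spent exactly once."""

    def __init__(self, utxos: set[str]):
        self.unspent = set(utxos)

    def submit(self, inputs: set[str]) -> bool:
        """Accept a transaction only if every input is still unspent."""
        if not inputs <= self.unspent:
            return False
        self.unspent -= inputs
        return True


ledger = Ledger({"alice", "bob", "carol"})
jill_ok = ledger.submit({"bob"})                   # Jill's small order needs one offer
jack_ok = ledger.submit({"alice", "bob"})          # Jack's larger order also picked Bob's offer
jack_retry_ok = ledger.submit({"alice", "carol"})  # Jack rebuilds against the remaining offers
print(jill_ok, jack_ok, jack_retry_ok)
```

Jack's first attempt fails as a whole even though Alice's offer was still available, because a transaction is valid only if all of its inputs are.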

Difficulty with larger orders

Jack would, of course, resubmit his transaction using a different set of UTXOs. However, he would face the same problem in the next round of transactions. Because he’s attempting to match against a small number of UTXOs in this example, his transaction is likely to go through the next time. However, this illustrates a general problem with pure order books. As the size of an order increases, the likelihood of being able to match the order decreases exponentially.

Suppose Jack had a very large swap that required 12 other orders to fill. Each of those 12 orders may have other people attempting to match against those orders. In order for Jack’s swap to be filled, he must win all 12 races. To win any one race, Jack should have an even chance. To win all 12 would be difficult indeed.
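The arithmetic behind “difficult indeed” is short. Treating each race as an independent coin flip, as the paragraph above does, gives:

```python
def fill_probability(races: int, win_chance: float = 0.5) -> float:
    """Probability of winning every race, assuming the races are independent."""
    return win_chance ** races


p = fill_probability(12)
print(f"{p:.6f}")  # 1 in 4096, about 0.024%
```

Independence is a simplifying assumption; in practice the races may be correlated, but the exponential decay in the number of required matches is the point.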

Jack has two solutions for this: (a) divide his order into many small orders or (b) attempt to reduce the number of orders required by finding matching orders. If he attempts to divide his order, he runs into the next issue, Blockchain congestion.

Blockchain congestion

So instead of a single order that requires 12 transactions to fill, Jack could instead create 12 orders that each match against a single order. Doing so today, Jack would run into the following issues:

- Fees: Instead of paying for a single transaction, Jack is now paying for 12 transactions. And unlike Robinhood trades which are Free™, swaps on the blockchain do incur a cost.

- Network Congestion: While this is configurable, today the maximum size of a single transaction is 16 KB and the maximum size of a block is 64 KB. Attempting to fill 12 times the number of orders on the blockchain (where each order would need to include a full copy of the validator script) would significantly worsen the experience for the entire community. And even in a post-Hydra world, this 12x capacity loss would have a significant impact.
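A back-of-the-envelope sketch of the congestion point, using the 16k and 64k limits quoted above. It assumes, pessimistically, that each transaction carrying a full validator script approaches the maximum transaction size; real transactions may be smaller.

```python
import math

MAX_TX_BYTES = 16 * 1024     # "16k" from the text, read here as KiB (assumption)
MAX_BLOCK_BYTES = 64 * 1024  # "64k" from the text, read here as KiB (assumption)


def blocks_needed(tx_count: int, tx_bytes: int = MAX_TX_BYTES) -> int:
    """Minimum number of blocks needed if these transactions had the chain to themselves."""
    per_block = MAX_BLOCK_BYTES // tx_bytes
    return math.ceil(tx_count / per_block)


print(blocks_needed(1))   # a single large order
print(blocks_needed(12))  # the same order split into 12 transactions
```

Under these assumptions, one split order occupies several entire blocks that no other user of the chain can share.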

So if splitting the order is problematic, perhaps Jack could go the other way and match against fewer larger orders. This leads to the next issue, Order erosion.

Order erosion

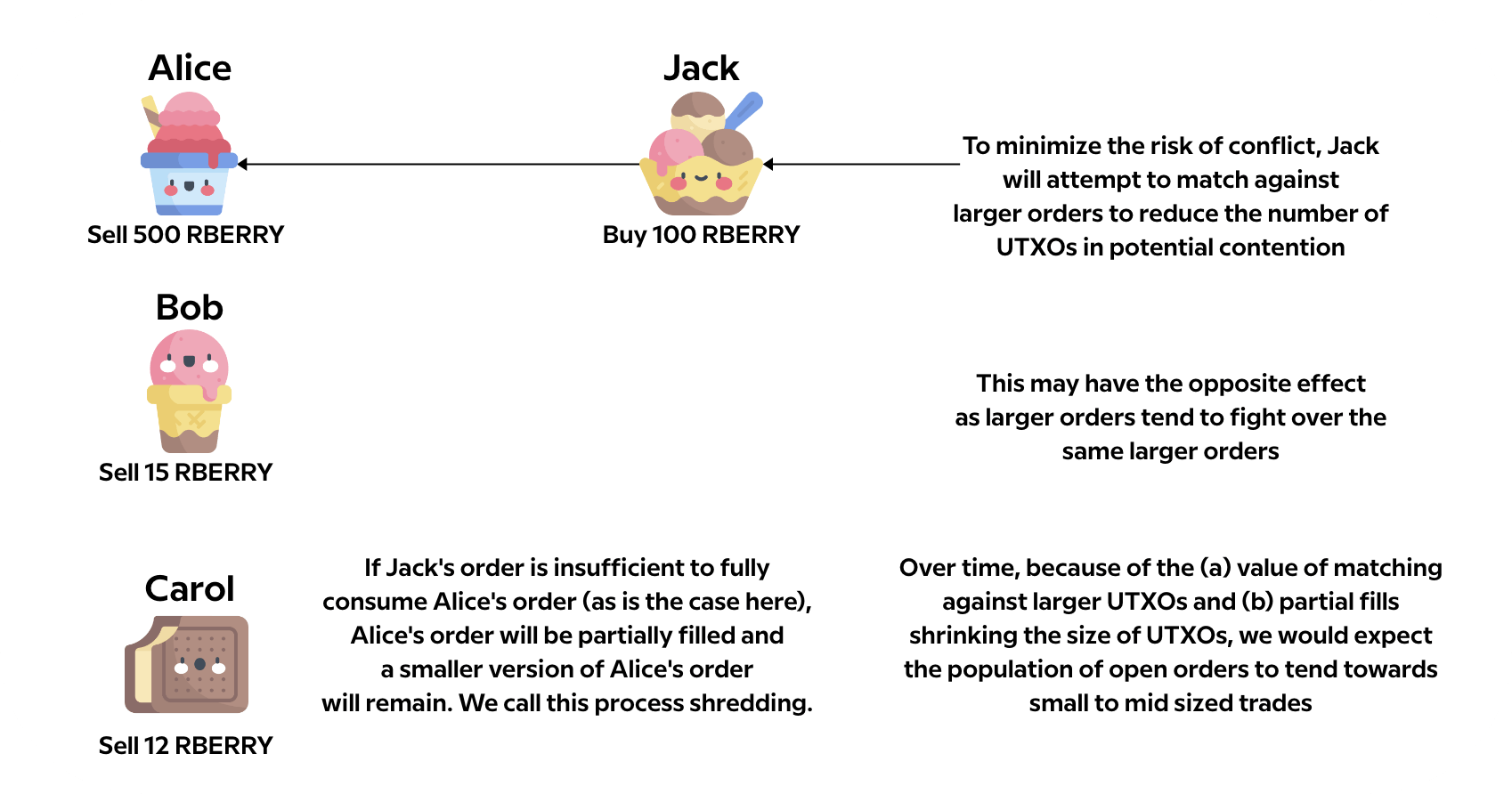

Because splitting an order has many disadvantages today (fewer in Hydra), Jack may attempt to match his large order against other large orders. The problem here is that many other large orders will attempt to do the same. It’s in Jack’s interest to find an order that’s larger (or the same size if he can find it) than the order he’s submitting.

Multiply this behavior by the entire community and you would expect large offers of liquidity to quickly be eroded into small offers of liquidity via partial fills. So some participants will be able to match their large order against other large orders, but most will not.

Market Depth Requirement

The next issue brings us to the reason that market makers came into being in the first place: to provide liquidity to thinly traded markets. Markets with sufficient outstanding orders (liquidity) can readily match bids and offers, and so the market appears fluid to participants - e.g., NASDAQ.

But as the liquidity in the market decreases, the number of orders that can be filled decreases to the level where no orders can be filled. At this point, the market has essentially become illiquid and a significant spread will appear between bids and offers resulting in far fewer additional trades being matched. Fewer trades are matched because a large spread forces one party to accept a poor offer in order to fill a trade.

Market makers solve this problem by always offering to take the trade. In the case where there’s a bid and ask, the offer will likely be between the two, but, more importantly, the market maker will take the trade even when there are no bids or asks, ensuring that market participants can always enter or exit the market.

Most pools on decentralized exchanges are thinly traded. Uniswap, for example, has over 8,000 trading pairs. If we rank the pools by trading volume and examine #30, we see only a couple of trades per hour. Consequently, we felt that a pure orderbook without the support of an AMM would be a poor fit.

Fee Market

The last issue pertains to how orders are executed. There are two basic models for execution, either (a) the submitter of the order executes the order or (b) they allow other parties to execute the order.

If the submitter executes the order, then they are required to run an off-chain order executor until the order is filled. This is a rather high bar that we doubt most market participants would want to clear.

This leads to the second case, allowing other parties to execute the order, as being the most likely. Because other parties are executing the order, the submitter must both incentivize them to execute the order and trust they execute their order fairly. Order executors, on the other hand, would likely have a different set of motivations, the primary one being to maximize their value received. One way to maximize the value received would be to prioritize those orders that provided the highest incentives. Orders that wanted to be executed earlier than other orders would include a higher incentive and hence a fee market would be born. And with fee markets come miner extractable value and sandwich attacks as described above.
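A minimal sketch of how such a fee market emerges: an executor with limited room per transaction, and no rule forcing first-come-first-served, simply sorts by incentive. The order fields and the `pick_orders` helper are hypothetical.

```python
from __future__ import annotations


def pick_orders(pending: list[dict], capacity: int) -> list[dict]:
    """A profit-maximizing executor fills the highest-incentive orders first."""
    return sorted(pending, key=lambda o: o["incentive"], reverse=True)[:capacity]


pending = [
    {"id": "early-small-tip", "submitted": 1, "incentive": 1},
    {"id": "later-big-tip", "submitted": 2, "incentive": 5},
    {"id": "latest-mid-tip", "submitted": 3, "incentive": 3},
]
chosen = [o["id"] for o in pick_orders(pending, capacity=2)]
print(chosen)
```

The earliest order is the one left behind, so its owner's only recourse is to raise the incentive, and the bidding begins.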

Resolving this issue and the other issues we’ve discussed leads us to the solution that we’ll be launching with, a hybrid AMM Orderbook.

Our Solution: The Scooper Model (AMM-Orderbook)

Finally, we come to the solution that we have chosen to go with for our launch. We believe it preserves many of the positive properties of the orderbook discussed above, without compromising on some of the criteria we believe will be critical to an early, leading DEX on the Cardano Blockchain.

Internally, we have been calling this solution AMM Orderbook. It stems from the same insight that underpins proof of stake protocols: by aligning incentives and creating systems of self-governance, you can scale a system by building trust into the protocol.

Like a pure orderbook, market participants can place good-until-canceled orders onto the blockchain. These don’t require interacting with any pre-existing entities, and so don’t suffer from the UTXO contention problems in other protocol designs. Using a programmable API, these orders can be tailored to the market participants’ desires. However, unlike a pure orderbook, the liquidity pool can rely on the orderly and efficient execution of swaps enabled by the automated market maker.

Similar to the protocols described above, we rely on a third party aggregator. It’s worth spending a moment recalling some properties of these third party aggregators, as this article is fairly long.

Internally, and as a company that loves a theme, we’ve been calling these actors “Scoopers”. A scooper builds and submits a transaction which executes many swaps against the automated market maker, and in return, collects a small ADA fee. As described in greater detail above, this role has an outsized amount of power, even when adhering to the rules of the automated market maker script.

However, if you can somehow establish a limited amount of trust in the aggregators, many of the challenges in each of the above protocols disappear. If you can trust your aggregators to consistently and fairly choose which orders to include, you no longer need escrow tokens, for example. You can focus on that contention-less on-ramp, and on protocol composability.

So how do we ensure that our scoopers are honest?

The first step is choosing trusted members of the community to run them. Cardano is blessed in that it already has a diverse set of participants with the reputation and pedigree for this. Thus, just as we select stake pools to partner with for our ISO, we (with the help of the community) will also be selecting stake pools to run these scoopers.

Later today we will be sending out a sign-up form for stake pool operators to apply to be a part of the ISO, and to run a SundaeSwap scooper. After filtering this list based on some simple criteria like social media presence and experience, we will turn things over to you, our community. We will be hosting a week-long vote to choose the initial set of scoopers.

At launch, the SundaeSwap DEX will assign 30-day scooper licenses to the stake pool operators above, which can be used to construct and aggregate swaps from the SundaeSwap community. Each time they do this, ADA transaction fees are locked in a script.

After some period of time, those scoopers must renew those licenses, claiming the transaction fees on the way.

If, however, governance decides (via a vote) that one of these scoopers is a bad actor, that license can be revoked, and the ADA transaction fees will be routed by the protocol to the treasury instead.
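The license lifecycle described above can be sketched as a small state machine. Only the 30-day term, the locked fees, renewal, and revocation come from the text; the class layout, the method names, and details such as renewing resetting the term from the renewal day are assumptions made for illustration.

```python
from dataclasses import dataclass

LICENSE_DAYS = 30  # license term, from the text


@dataclass
class License:
    scooper: str
    issued_day: int
    locked_fees: int = 0   # ADA fees held by the script until renewal
    revoked: bool = False

    def is_valid(self, today: int) -> bool:
        return not self.revoked and today < self.issued_day + LICENSE_DAYS

    def record_scoop(self, today: int, fee: int) -> None:
        """Each scoop locks its ADA fee instead of paying it out immediately."""
        if not self.is_valid(today):
            raise PermissionError("license expired or revoked")
        self.locked_fees += fee

    def renew(self, today: int) -> int:
        """Renewing releases the locked fees to the scooper and restarts the term."""
        if self.revoked:
            raise PermissionError("license revoked")
        claimed, self.locked_fees = self.locked_fees, 0
        self.issued_day = today
        return claimed

    def revoke(self) -> int:
        """After a governance vote, the locked fees are routed to the treasury."""
        self.revoked = True
        to_treasury, self.locked_fees = self.locked_fees, 0
        return to_treasury


honest = License("pool-A", issued_day=0)
honest.record_scoop(today=3, fee=2)
claimed = honest.renew(today=29)

rogue = License("pool-B", issued_day=0)
rogue.record_scoop(today=5, fee=7)
treasury_gain = rogue.revoke()
```

Because fees are paid out only at renewal, a scooper always has unclaimed income at stake whenever a revocation vote could be called.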

To support such a vote, someone can, for example, run a reference implementation of the “order selection algorithm,” publishing the results on IPFS for everyone to see. It’s possible for strange network conditions to create small deviations in this among scoopers; but if a scooper consistently and dramatically deviates from this, a vote can be called to revoke their license and deny them both the ADA transaction fees and access to the market that would have motivated the bad actions in the first place.
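One way such a comparison could work is sketched below: measure how much of the reference selection a scooper left out of each batch, and flag only sustained, large gaps. The metric, the threshold, and the window size are hypothetical choices, not part of the protocol as described.

```python
from __future__ import annotations


def deviation(reference: list[str], observed: list[str]) -> float:
    """Fraction of reference-selected orders the scooper left out (0.0 means none)."""
    if not reference:
        return 0.0
    missing = set(reference) - set(observed)
    return len(missing) / len(reference)


def flag_for_vote(history: list[float], threshold: float = 0.25, min_batches: int = 3) -> bool:
    """Flag only consistent, large deviations; occasional small ones are tolerated."""
    recent = history[-min_batches:]
    return len(recent) == min_batches and all(d > threshold for d in recent)


reference = ["a", "b", "c", "d"]
print(deviation(reference, ["a", "b", "c", "d"]))  # identical selection
print(deviation(reference, ["a", "x"]))            # most reference orders left out
print(flag_for_vote([0.0, 0.1, 0.0]))
print(flag_for_vote([0.5, 0.75, 0.5]))
```

Any such check only informs the vote; the decision to revoke stays with governance.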

In this way, assuming that the collected ADA rewards are material, the scooper is highly incentivized to remain honest in order to claim those rewards. If the collected ADA rewards are small, then the market is thinly traded and the amount of value they can extract out of the market is also too small to rationally justify the effort needed to act badly.

Over time, we see the DAO as upgrading this system with more dynamic election structures, where the Sundae token serves a similar role to ADA in selecting and thereafter rewarding or punishing the set of aggregators. This will need to be done with care, but that growth is aligned well with the growth of our protocol as a whole.

Benchmarks

Part of the reason we held off releasing this article for so long is that we wanted to come to the table with receipts: we wanted to conduct a large scale load test of our protocol. In this way, we could establish a theoretical upper limit on throughput and make some educated estimates about the average throughput we will see.

As a point of reference, we chose the Uniswap v3 protocol to compare against.

Querying Etherscan for transactions involving the Uniswap v3 router contract for the first few days in November, we see:

- 11/01/21: 24,477 transactions

- 11/02/21: 27,961 transactions

- 11/03/21: 28,203 transactions

This works out to an average of 26,880 transactions per day, or roughly 18.66 top-level transactions per minute. Given that in August of 2020, Uniswap was advertising 100,000 transactions in a single day, many of these top-level transactions likely represent multiple operations, so their throughput could be closer to 70 transactions per minute. Given our focus on building the DEX, this was a sufficient approximation for us, and so we didn’t dig deeper to get exact numbers.
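For anyone who wants to check the arithmetic, the figures above can be reproduced in a few lines. The daily counts and the 100,000-per-day figure are the ones quoted in the text; nothing else is assumed.

```python
daily = {"11/01/21": 24_477, "11/02/21": 27_961, "11/03/21": 28_203}
MINUTES_PER_DAY = 24 * 60

avg_per_day = sum(daily.values()) / len(daily)  # about 26,880
avg_per_minute = avg_per_day / MINUTES_PER_DAY  # roughly 18.7
peak_per_minute = 100_000 / MINUTES_PER_DAY     # about 69.4, the "closer to 70" figure

print(round(avg_per_day), round(avg_per_minute, 2), round(peak_per_minute, 1))
```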

Considering the popularity and size of Uniswap, anywhere approaching that throughput would be a monumental achievement for SundaeSwap’s first protocol version.

That being said, keep in mind that the results below represent ideal conditions, in low-traffic testnets. The actual throughput will be lower as we share the network bandwidth with other protocols, which reinforces how critical the race to a Layer 2 solution will be. Our hope is that this solution serves the market need today, creates a rich and thriving ecosystem, and sets the stage for the SundaeSwap protocol to play an even more central role as we scale into Layer 2.

We conducted two primary tests that we want to share with you today. Both tests executed market operations as fast as possible, and stopped after reaching 1000 operations processed.

The first was run on our private developer testnet. As part of an ongoing discussion with IOG about transaction limits and protocol parameters, we wanted to explore the impact of transaction size on protocols. To this end, we dramatically increased the block CPU and memory limits so that the limiting factor was transaction size, and then increased the transaction size limit from 16 KB to 18 KB, and the transaction memory limit from 10 million units to 30 million units.

With these parameters, we were able to execute all 1000 swaps in roughly 8 minutes, for an average throughput of 120 swaps per minute. This is roughly 170% of the 70 transactions per minute estimate for Uniswap above. This case is optimistic, but shows some of the upward growth potential for Cardano as a whole.

Second, after IOG increased the memory limits from 10 million to 30 million units on Alonzo Purple, we ran the same load test there. Under these more pessimistic conditions, we achieved an average throughput on all 1000 operations of 43 operations per minute, roughly 60% of our higher Uniswap estimate.
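The two percentages quoted for the load tests follow directly from the reported throughputs and the 70-per-minute estimate; the snippet below simply restates that division, with variable names chosen for illustration.

```python
UNISWAP_ESTIMATE = 70  # transactions per minute, the higher estimate above
PRIVATE_TESTNET = 120  # swaps per minute, as reported
ALONZO_PURPLE = 43     # operations per minute, as reported


def relative(throughput: float, baseline: float = UNISWAP_ESTIMATE) -> float:
    """Throughput expressed as a fraction of the baseline estimate."""
    return throughput / baseline


print(f"{relative(PRIVATE_TESTNET):.0%}")  # private testnet vs. estimate
print(f"{relative(ALONZO_PURPLE):.0%}")    # Alonzo Purple vs. estimate
```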

This also gives some interesting insight into the real-world performance and potential of the Cardano protocol. IOG has been, understandably, conservative about various transaction limits. However, comparing the current block sizes to the numbers cited in their research, it’s possible that this block size could increase by up to a factor of 32. We haven’t run the test, but some napkin math places our maximum throughput under those conditions between 1,300 and 3,800 transactions per minute! Impressive for a layer one solution. And that’s not the only dimension where improvements can be had. It speaks to an exciting future for Cardano.
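The napkin math can be written out as follows. It assumes throughput scales linearly with block size, which, as noted, has not been tested; the function name is hypothetical.

```python
BLOCK_SIZE_FACTOR = 32  # upper bound on block growth cited in the text


def scaled(throughput_per_minute: float, factor: int = BLOCK_SIZE_FACTOR) -> float:
    """Projected throughput, assuming linear scaling with block size (untested)."""
    return throughput_per_minute * factor


low = scaled(43)    # starting from the Alonzo Purple result
high = scaled(120)  # starting from the private testnet result
print(low, high)    # 1376 3840
```

Rounding these down gives the 1,300 to 3,800 range quoted above.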

Finally, the beautiful thing about this protocol is that end users face zero contention. Outside of times of extreme network congestion, it should be extremely rare for the queuing of an order to fail, a failure mode that makes for a terrible user experience. Thus, even in times of extreme load, while the latency of your orders might increase, all users will continue to enjoy uninterrupted service.

Given that we will likely have to share the network capacity with other protocols, the actual numbers at times of peak congestion will likely be lower than this, and so we want to temper expectations. A layer 1 DEX will serve as a key source of settlement liquidity, and will meet a very critical demand in the Cardano ecosystem early on; the bulk of retail traffic, however, will ultimately gravitate towards a cheaper, faster, more scalable Layer 2 solution, and this remains a key part of our roadmap, which we’ll be excited to share with you in the future.

P.S. For those curious about the nitty-gritty details, we’ve published a summary report with a link to the Cardanoscan explorer, and a list of every transaction involved in the load test. We will continue to publish results for high-profile load tests and experiments in this repository.

Conclusion

Hopefully this article gives you some useful insights into how we have been thinking about the design of our protocol, and the careful measures we have been taking and continue to take to fairly and objectively weigh a number of solutions. We’re very excited about the SundaeSwap DEX launch, and confident in our protocol’s ability to meet the demands of the early Cardano ecosystem.

While we have a few intense weeks ahead of us and we aren’t ready to give hard dates to anything in particular just yet, here’s what we have in store for the very near-term future:

- We will start the selection process for the stake pool operators by publishing a Google Form for stake pool operators to apply through. If you emailed us previously, you will need to apply using the official form once it is available, but we’ll send you an email with a link to the form as a reminder.

- Our public testnet will begin. Once this is up you’ll be able to see our scaling solution in action as well as help stress test the protocol, find bugs that went unnoticed, and be among the first users of SundaeSwap!

- Our audit will conclude, and we will address any feedback they have provided.

- We will launch our mainnet protocol and along with it, the ISO. We’ll be keeping the Beta label while we bootstrap the DAO, up until we’ve had our first successful community vote.

In a few short weeks we’ll be ready to show off what we have been building over these past few months. We hope you are as excited as we are.

The SundaeSwap Team